Comparing Three Real-Time OLAP Databases: Apache Pinot, Apache Druid, and ClickHouse

Index

Introduction

Comparison Structure

Summary of Comparison

High-Level Architecture

Data Sources

Ingestion

Storage

Indexing Strategies

Query

Cluster Management

Security

Cloud Deployment Model

Outbound Connectors

Video

The authors of this blog gave a presentation about it at Real-Time Analytics Summit 2023.

Introduction

With Real-Time Analytics gaining popularity, we are often asked, "How do you compare Apache Pinot™ vs. Apache® Druid vs. ClickHouse®?” In this article, we attempt to provide a fair comparison of the three technologies, along with areas of strength and opportunities for improvement for each.

Recently, we’ve seen a shift in the analytical landscape from traditional OnLine Analytical Processing (OLAP), which was batch-oriented in nature, to real-time OLAP. Instead of generating reports or dashboards which are hours delayed, we now want to analyze data as its being generated - within milliseconds.

Consequently, real-time OLAP databases are getting increasingly popular in the last few years and have seen mainstream adoption in several big companies such as LinkedIn, Uber, Netflix, Cisco, and Bloomberg. As organizations continue to move from batch analytics to real-time analytics, choosing the right OLAP database is an increasingly critical decision. The choice depends on several factors, such as use case, ingestion constraints, query performance, and production readiness, to name a few.

In this article, we present an in-depth comparison of three open-source real-time OLAP databases: Apache Pinot, Apache Druid, and ClickHouse, with the above dimensions in mind. In addition, we will also look at some salient features in-depth to uncover how things work under the hood across different systems.

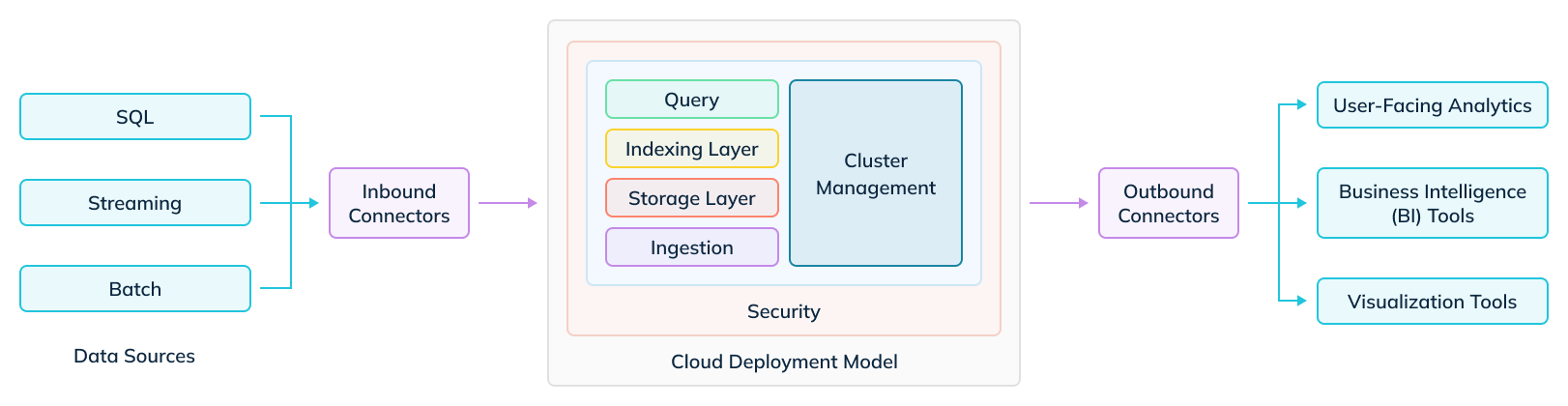

Comparison Structure

Here’s a simplified view of the internal layers of a typical real-time OLAP database. In the following few sections, we will go through each layer and compare the capabilities across the three systems mentioned above. The versions used for each project are Apache Pinot 0.12.0, released on Jan 19, 2023; Apache Druid 25.0.0, released on Jan 4, 2023; and ClickHouse 23.1, released on Jan 26, 2023. Since then, Clickhouse released 22.2 and 23.3; these releases have not yet been incorporated into our analysis.

Fig: Layers of a typical real-time OLAP database

Note: There are some cases where certain capabilities are supported in the respective commercial Database-as-a-Service (DBaaS) product offerings. We will make explicit note of these in the comparison table wherever necessary.

Summary of Comparison: Apache Pinot vs. Apache Druid vs. ClickHouse

Based on our observations, as described below in great detail, here’s a quick summary of the strengths and weaknesses of each system.

Apache Pinot

Highlights

Apache Pinot has been designed for scale and performance with best-in-class indexing strategies and smart data layout techniques used to boost query performance, amongst other things. It has great support for ingesting data from real-time and batch data sources and is the only system that can support upserts in real-time. Overall, it's easy to operate with rich cluster management tools and the only system to support true multi-tenancy. Lastly, the flexible cloud deployment model and best-in-class cloud tiered storage make it a compelling choice for companies of all sizes to build real-time analytical applications.

Needs Improvements

Apache Pinot needs to improve on its data type support, outbound connectors for ease of BI integration, SQL based pre-processing, and some security improvements.

Apache Druid

Highlights

Apache Druid is also designed to handle large-scale data and does a great job of ingesting from real-time and batch data sources. It has great support for data pre-processing during ingestion and also has recently launched a flexible SQL based ingestion approach.

Needs Improvements

Apache Druid currently does not support real-time upserts, which is needed in many critical use cases. It does not support any way to push/write data in real time. It has limited indexing capabilities and no current support for any cloud based tiered storage.

ClickHouse

Highlights

Of all the three systems, ClickHouse is the easiest to get started for new developers with the least number of components. Compared to the other two - it has a better real-time push model (although it also has some limitations). It has great data type support and very rich support for outbound connectors.

Needs Improvements

ClickHouse has poor support for pulling data from real-time sources (see below why this is important). Like Druid, indexing support is also quite limited, which hinders query performance, especially when dealing with a large amount of data. Although single node deployment is easy, running ClickHouse in distributed mode is quite complex. It has some operational limitations in terms of difficulty to scale-up/out nodes, lack of multi-tenancy, and management console. In general, scalability is one of the key challenges with ClickHouse.

We now begin to go deep into each of the layers in detail.

High-Level Architecture: Apache Pinot, Apache Druid, and ClickHouse

To set some context for the rest of the blog, let’s take a high-level look at each system’s architecture.

All of the real-time OLAP databases considered here are column-oriented. Columnar data formats are good for efficiently scanning only selected columns of data, minimizing storage footprint, and scanning through highly redundant data entries (also called “low-cardinality columns”).

They are also optimized to run in a cluster of servers for high availability and horizontal scalability (scale out). However, the ease of scaling and management and the way queries are handled differs greatly across the different implementations, as we will see in the sections to come.

Each of these three databases has the concept of a table, a logical abstraction representing a collection of related data composed of columns and rows. Each table’s data is broken down into small chunks, which are packed in a columnar fashion. These chunks are called segments in Apache Pinot and Apache Druid, and parts in ClickHouse.

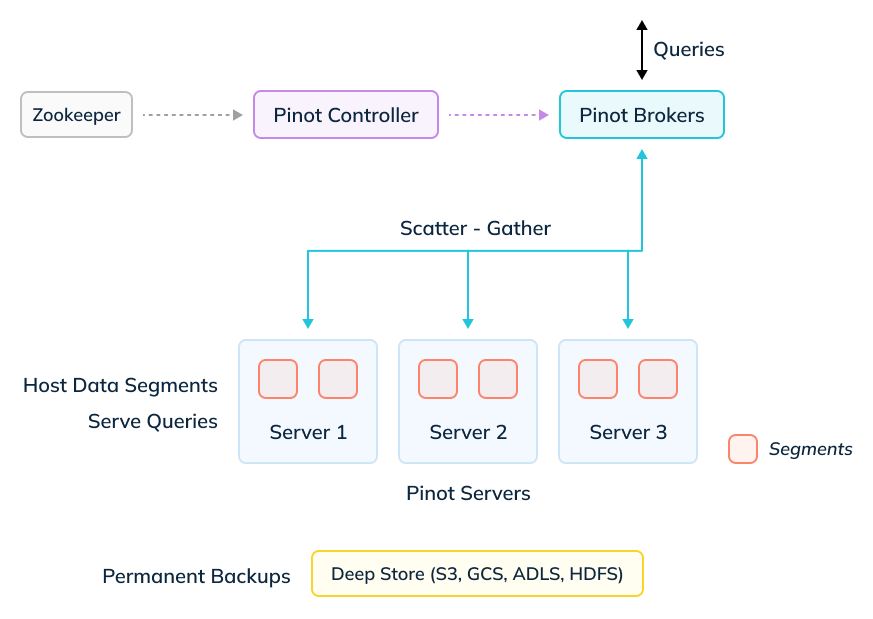

Apache Pinot

Fig: Apache Pinot architecture

Data layer: This layer contains Servers, which store a subset of segments locally and process them at query time. Servers either pull data from streaming sources directly to build segments, or Minions can build and push segments from batch data sources. Segments are also stored onto a deep store—a cloud object store such as Amazon S3, GCS, ADLS—for permanent backups and recovery.

Query layer: This layer contains Brokers, who receive queries from clients and perform a scatter gather to and from Servers.

Control layer: This layer contains the Controllers, which manage all the components of the cluster. Apache Zookeeper is used as a persistent metadata store.

Read more details in the Pinot architecture docs.

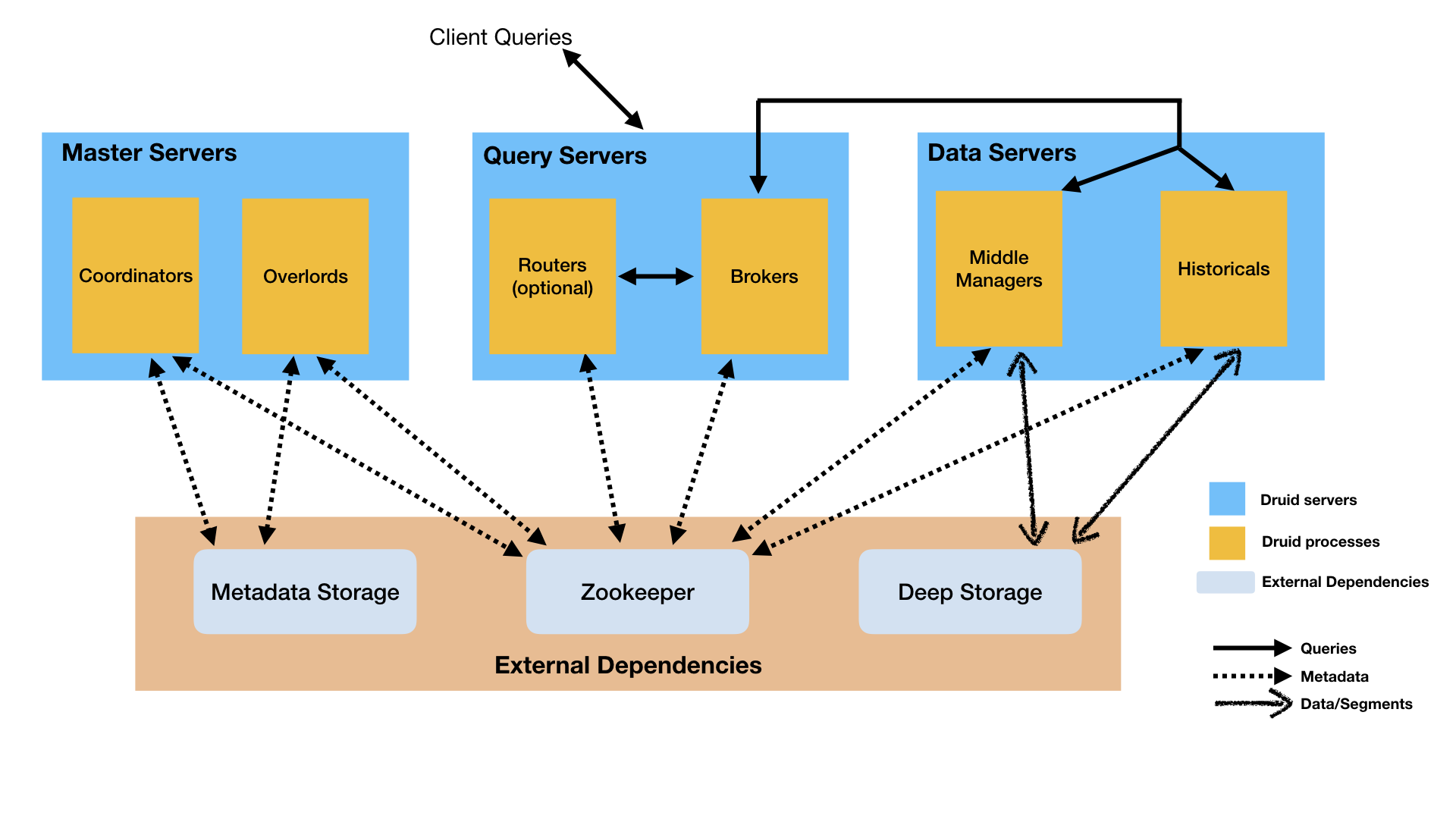

Apache Druid

Fig: Apache Druid Architecture

Data layer: This layer consists of Middle Managers, which execute ingestion workloads, and Historicals, which store all queryable data. Segments are stored in a deep store for permanent backup and recovery.

Query layer: This layer contains the Brokers, which handle queries from external clients, and the optional Routers, which route requests to Brokers, Coordinators, and Overlords.

Control layer: This layer contains the Coordinator which manages data availability on the cluster, and the Overlords, which control the assignment of data ingestion workloads. Zookeeper is used for internal service discovery, coordination, and leader election. A system like MySql or Postgres is used for segment and task metadata storage.

Read more details in the Druid design docs.

ClickHouse

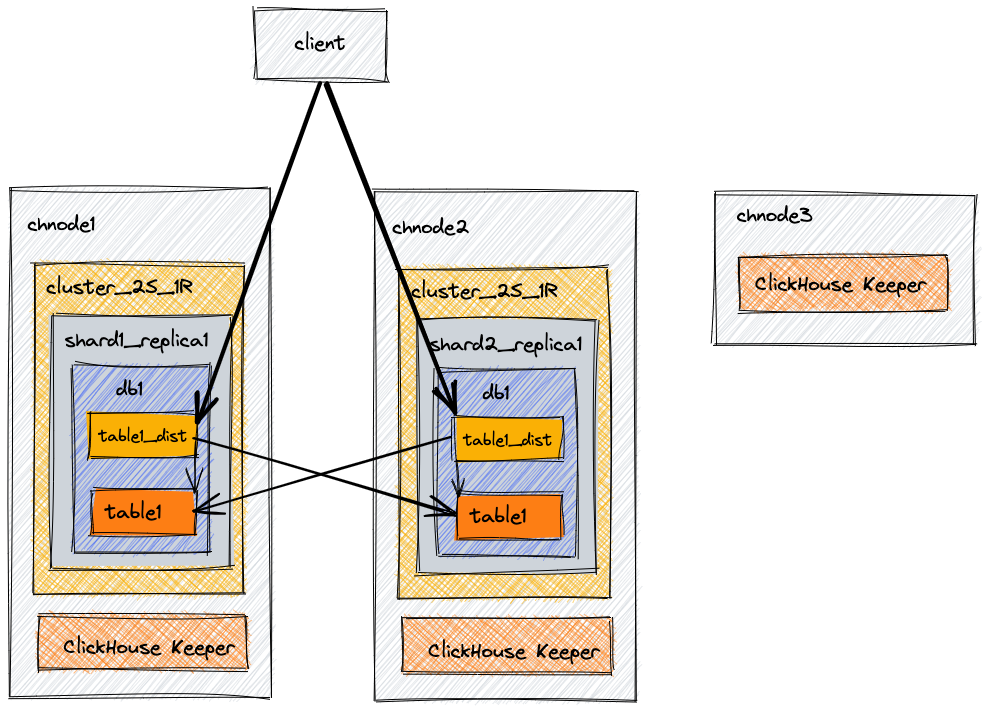

Data layer: This layer contains the ClickHouse Servers, which host the data shards and manage ingestion. ClickHouse has no concept of a deep store for permanent storage.

Query layer: There is no architectural separation between data and query layer. A query can be directly made to any ClickHouse server to query data on that server. Of course, data is usually distributed, so a Distributed Table would be created on some dedicated nodes to act as query nodes.

Control layer: ClickHouse Keeper / Apache Zookeeper is used for metadata. No other centralized cluster management takes place.

Read more details in the ClickHouse "scaling out" docs.

Overall, ClickHouse has the fewest moving parts, followed by Pinot, then Druid. ClickHouse achieves this by combining many functionalities onto the same node or skipping some altogether.

While this makes getting started with ClickHouse relatively easier, several challenges arise when you hit a certain scale that comes with any practical setup.

Scalability: Separation of concerns is always a better design for scalability – e.g. In Pinot if the rate of events goes up one can simply scale the real-time servers minimizing the impact on query latency if queries per second increase one can simply scale the brokers.

Operational complexity: Users have to build tooling and automation on their own for every skipped functionality – e.g. distributed query routing, or cluster management operations such as replication, ingestion, and node maintenance in ClickHouse have to be set up manually, which is automatic and out-of-the-box in Pinot and Druid, making them more operator-friendly for managing production workloads.

Data Sources

This section gives a very high-level view of native ingestion support for various kinds of data sources. The corresponding in-depth details are captured in the ingestion section below.

Note: Native here means no external component is needed for that capability.

Real-Time Data Sources

Native, out-of-the-box support for real-time data sources:

| Real-time data source | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Real-Time Data Source: a comparison of Apache Pinot, Apache Druid, and Clickhouse

Batch Data Sources

Native, out-of-the-box support for batch sources:

| Batch data source | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Batch Data Sources: a comparison of Apache Pinot, Apache Druid, and ClickHouse

Note: Although ClickHouse mentions the above systems in the integrations section, that’s primarily for query processing and not for data ingestion.

SQL Data Sources

Native, out-of-the-box support for SQL sources:

| SQL data source | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: SQL Data Sources, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Note: Although ClickHouse mentions the above systems in the integrations section, that’s primarily for query processing and not for data ingestion.

Ingestion

Before a database can run analytical queries, the data must get into the system. This is the process of ingestion. This layer deals with all the capabilities required for ingesting data into the OLAP system from various kinds of data sources, as mentioned above. As we go through the details of each data source, here are the key things to keep in mind:

Append only vs upserts: Append only ingestion is the minimum requirement from an OLAP database. But supporting upserts provides data and query consistency.

Pull vs push: Can the OLAP database support a pull / push based ingestion or both. Each one has its pros and cons.

At least once vs exactly once: At-least-once semantics is the minimum requirement to ensure there’s no data loss. Having the ability to do exactly once data ingestion is great for certain business critical applications.

Real-Time Ingestion

Ingesting data from real-time data sources like Apache Kafka is integral to real-time analytics. In this section, we will look at the different ways of ingesting real-time data and compare the nuances of each system.

Pull vs Push Based Real-Time Ingestion

There are two ways of ingesting real-time data:

Pull based: Actively poll/fetch data from the real-time data source

Push based: Push messages directly into the database

A native pull-based ingestion model is generally considered to be better than a push-based model for real-time data ingestion. Here are the reasons why:

Fewer moving parts: A native pull-based approach works great with systems like Apache Kafka and Amazon Kinesis and obviates the need for an intermediate connector (e.g., the Kafka Connect framework). This improves overall freshness and error probability by reducing the number of hops in the pipeline.

More scalable: Based on empirical data, the pull-based approach supports higher throughput than a corresponding push-based approach.

Ease of replication: The pull-based model makes it very easy to replicate data since all replicas can fetch independently from the data source. In addition, strict partition ordering within the data source also alleviates the need for strong coordination among replicas to keep them in sync. For instance, replicas can all agree on the same Kafka offset to ensure data synchronization. A push-based model has to deal with the overhead of how to keep the replicas in sync.

Ease of exactly once semantics: A pull-based model makes it easier to guarantee exactly once semantics with systems like Kafka. Arguably, a push based model has to do more work to ensure correctness in this case.

Need for Push Based Ingestion

There are certain cases where push based ingestion is preferred. For instance, in some cases, the real-time data might be generated directly by an application/service without any Kafka in the picture. In other cases, even though data might exist in a Kafka cluster, pull based ingestion may not be possible due to network connectivity issues (eg: Kafka in another VPC without any inbound access). For all such cases, we need the ability to push events directly to the database.

Next, we’ll go through each such mechanism in more detail.

Pull Based Ingestion

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Pull-Based Ingestion, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Apache Kafka Integration: Apache Pinot & ClickHouse comparison

It can be said that the real-time analytics journey began with Apache Kafka. The popularity and widespread adoption of Kafka in nearly every major company worldwide has made it one of the most important sources of real-time data. Apache Pinot was designed to integrate well with Apache Kafka and has excellent support for handling a high ingestion rate, out of order events, and exactly once semantics.

As noted in the table above, Druid and Pinot are very similar in terms of Kafka integration, whereas ClickHouse took a very different approach. This section will focus on these differences between Pinot and ClickHouse.

Low-Level vs Consumer groups

Internally, ClickHouse uses Consumer Groups within the Kafka Table Engine to fetch data from Kafka. This has many issues, including message duplication, scalability challenges, fault tolerance, and so on, as described in this talk about Pinot’s journey away from the Consumer group approach. Conversely, Pinot evolved to use a low-level Kafka consumer approach that addresses all these issues.

Ingestion Latency

Ingestion latency is defined as the amount of time between an event being produced at the source and the event being queryable in the database. Kafka Table Engine in ClickHouse reads messages in large batches. Default is mentioned as 64K, and higher batch sizes are recommended (500K to 1 Million entries per batch are not uncommon). Needless to say, this will result in higher ingestion latency. Pinot, on the other hand, is able to stream data in much smaller batches - almost 3 orders of magnitude lower thus leading to much lower ingestion latency without adding any additional overhead.

Data Duplication

The recommended pull ingestion mechanism in ClickHouse is not only complex, but it can also result in data duplication. The recommended way to resolve those is to use ReplacingMergeTree, which has its own issues as discussed in the upsert section. On the other hand, since Pinot generates segments in lockstep with Kafka offsets - it's guaranteed to not generate any duplicates (unless duplicates are present in the Kafka stream itself, which can also be handled by Pinot - see Upsert section below).

Scalability

In ClickHouse, the number of servers is limited by the number of Kafka partitions and, therefore, difficult to scale. In the case of Pinot, segments can be automatically moved to different hosts (non-consuming), which makes it very easy to scale and not limited by the number of Kafka partitions.

Data Replication

As mentioned previously, a pull based approach makes data replication in Pinot much simpler by relying on Kafka offsets for coordinating across replicas. On the other hand, since ClickHouse does not have a pure pull model, it has to rely on a much more complex replication system. For each batch of data inserted into the ReplicatedMergeTree family table, metadata is written to Zookeeper / ClickHouse Keeper. It's important to note that this happens in the fast path of data ingestion, and having a strict dependency on Zookeeper / ClickHouse Keeper is quite a bit of an overhead.

Cloud Readiness

It appears that Kafka Table Engine is not supported in ClickHouse Cloud and the recommendation is to use alternate approaches (eg: push based techniques). Conversely, Pinot has productionized Kafka integration over the last several years and is fully supported in StarTree Cloud.

Push Based Ingestion

As mentioned before, push based ingestion is often needed to get around Kafka dependency or network connectivity issues. Let’s take a look at how it works in the three systems:

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Push-Based Ingestion, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Real-Time Upserts

In the case of real-time event streaming data sources such as Apache Kafka, duplicate or upsert events may exist in the stream. Several mission-critical use cases require the database to handle such events during ingestion to ensure accurate query results. Uber’s financial dashboards, Citi’s risk monitoring, and many others rely on upserts to ensure business continuity. This section compares how the different systems handle such events.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Real-Time Upserts, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Note that both Apache Druid and ClickHouse claim the ability to do upserts. However, it requires complex workarounds such as batch insertions, query time aggregations, or a complex data model that is difficult to generalize.

Upserts in Pinot

Pinot has great support for handling upsert events in the incoming real-time stream for event-streaming technologies like Kafka. This is done without sacrificing ingestion and query performance and maintains real-time data freshness — which is not true in the case of the other systems.

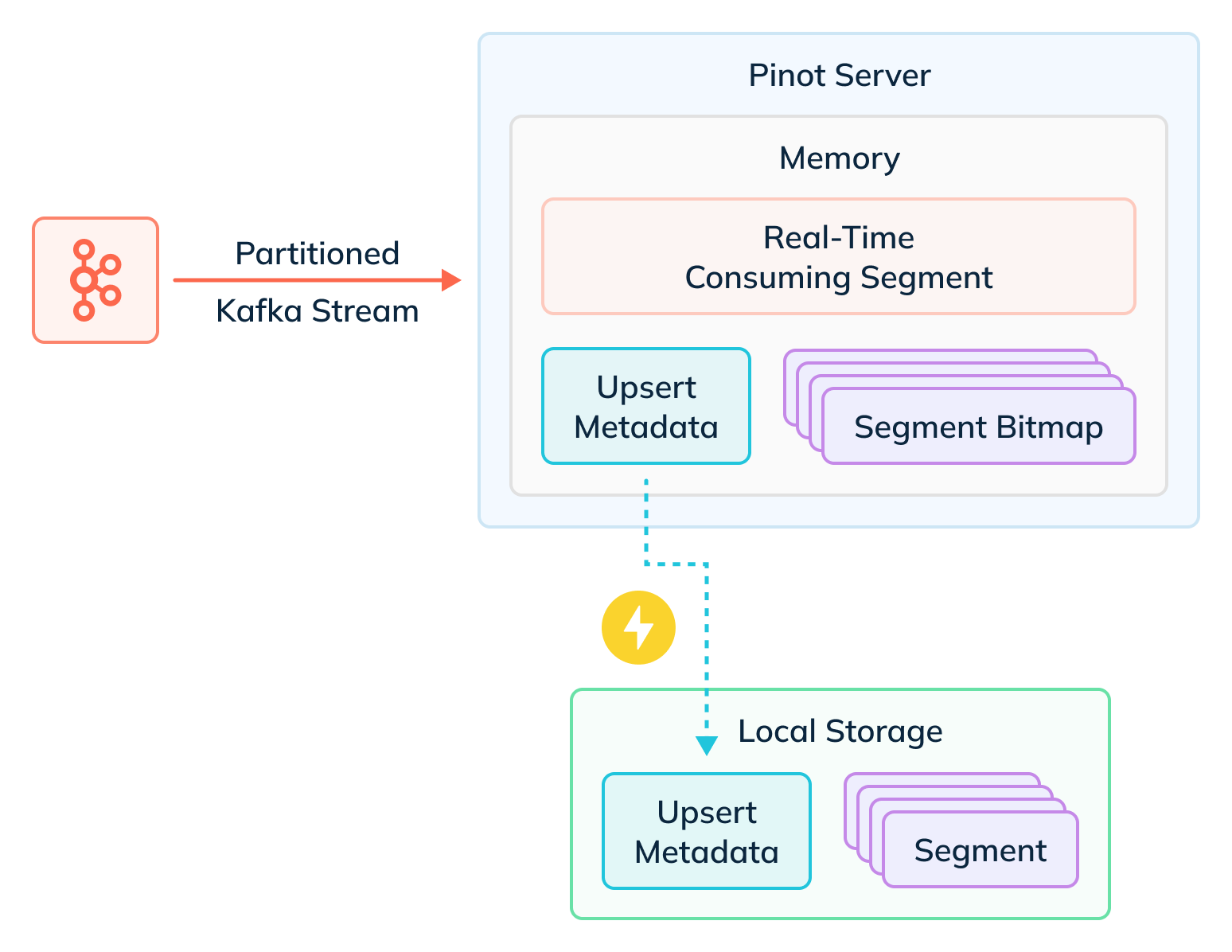

How does it work?

Users can enable upserts in a real-time Pinot table by specifying a primary key (see the Stream Ingestion with Upsert docs). Each server in turn maintains a map of the primary key to the location of the latest version of the record for that key. When an existing record is upserted by a new one, the server uses this map to mark the old record as obsolete using a per segment bitmap. All such obsolete records are then filtered out during query execution. This design ensures least overhead during ingestion and query time and works at scale.

Fig: Upserts in Apache Pinot

Scalable Upserts with StarTree Cloud

One caveat of upserts in Pinot was the memory overhead experienced by Pinot servers when a large number of primary keys are involved. StarTree Cloud removes this limitation by eliminating the in-memory requirement of managing upsert metadata. StarTree Cloud workspaces can easily handle billions of primary keys per server without sacrificing performance.

Upsert Improvements for Pinot (Coming Soon)

You can read more about additional improvements coming to Apache Pinot in this related blog.

Some of the highlights related to upserts include:

Time To Live (TTL): for the primary keys — helps in reducing the footprint of the upsert metadata and thus memory overhead on the server.

Compaction: ability to delete obsolete records and improve storage efficiency

Ease of bootstrap: Bootstrapping historical data in an upsert table is currently challenging. Some users rely on a Flink Pinot connector to push historical segments in the real-time upsert enabled table. We will be adding native support for this shortly.

Batch Data Ingestion

A native, out-of-the-box support for importing data from batch data sources (e.g., S3 or GCS) is crucial for developer productivity. Otherwise, users must maintain custom data pipelines, which becomes a big overhead to maintain and scale up.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Batch-Based Ingestion, a comparison of Apache Pinot, Apache Druid, and ClickHouse

SQL Data Ingestion

SQL data ingestion can be done in 2 ways - using CDC (Change Data Capture) in real-time or in a batch manner. This section covers the batch oriented ingestion from SQL data sources.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: SQL Data Ingestion, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Batch and SQL ingestion support in StarTree

StarTree provides a native, out-of-the-box support for ingesting the batch and SQL data sources mentioned above. Read No-code Batch Ingestion for Apache Pinot in StarTree Cloud for more details. This is done by a background job that runs alongside the primary cluster.

Key benefits:

Easy to use: There is no need for additional ETL tools for most common scenarios.

Efficient: Segments are generated by the background job outside the primary cluster and pushed to the server serving live queries, with negligible impact

Robust: Ingestion tasks are checkpointed and retried upon failures

Scalable & cost efficient: auto-scaling is enabled in StarTree cloud for elastic scale-up/down of the ingestion tasks

Ingestion time pre-processing

Often, data needs to be pre-processed before it can be ingested and queried in Pinot. Examples include flattening a complex JSON structure, rolling up data, or type/value transformation. Let’s look at some of the popular pre-processing capabilities requested by users.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Ingestion Time Pre-Processing, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Table Management

Table data often needs to undergo some change after ingestion to keep up with changes in the data or the workload. This section compares how the three systems fare in this area.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Table Management, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Schema Management

This section compares the three systems on schema characteristics, such as native support for data of varied types and being able to evolve the schema.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Schema Management, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Storage

As mentioned earlier, each of these databases has a columnar storage format.

At a columnar level, there are differences in compression & encoding schemes which further affect the storage footprint and access latencies.

At a segment level, data layout configuration options help increase pruning.

At a server level, smart storage mapping strategies help drastically reduce query-fanout, further reducing latency and boosting throughput.

At a cluster level, the availability of storage options (multi-volumes, cloud object storage) and the ability to create storage tiers for hot / cold data to control cost-latency trade off, greatly improves cost to serve.

This section looks at the above aspects for the three systems.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Storage, a comparison of Apache Pinot, Apache Druid and, ClickHouse

Data Layout: Strategies to Reduce Query Fanout

This blog post talks about the lifecycle of a query in Apache Pinot and the optimizations available at each point - from broker, to servers, to segments, to columns - to reduce the amount of work done. This section focuses on query fanout – reducing the number of nodes a query is scattered to. Reducing the fanout helps eliminate long tail latency (your query will be as slow as the slowest server) and, in turn, helps improve throughput.

The strategies available in Pinot to map the data segments onto servers and enhance pruning at broker level are:

Balanced: This strategy simply distributes segments evenly and is also the only strategy adopted by Druid and ClickHouse.

Replica-group: This strategy creates groups of servers such that an entire replica of the dataset is constrained to each group, thus reducing server fanout and being able to scale horizontally for higher throughput.

Partition-based replica-group: This strategy further creates partition based groups to reduce segment fanout within a replica group.

The strategies available in Pinot to map the tables onto servers and improve ease of operation are:

Creating pools for a no-downtime rolling restart of large shared clusters

Fault-domain aware instance assignment

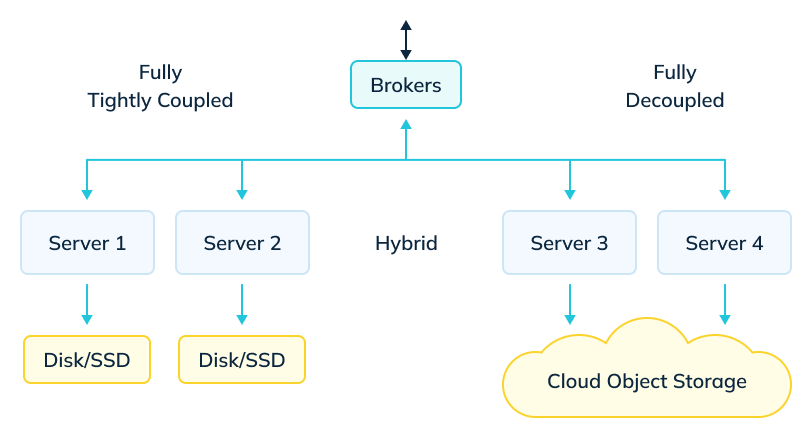

Tiered Storage using Cloud Object Storage

Each of the three systems started with having tightly coupled storage and compute, wherein they used compute nodes that included local or directly attached high performance storage, such as NVMe SSDs. This is one of the reasons that make them suitable for low latency.

However, local high-performance storage is expensive compared to decoupled systems that primarily use cloud object storage. Cloud storage is cheaper but slower and, therefore, usually unsuitable as the sole storage layer for interactive real-time analytics. Simply choosing one of these two systems for all analytics needs does not make sense.

Real-time (or recent) data tends to be more valuable and is queried more frequently. On the other hand, historical data is queried less frequently. Latency is still important, but with this data being large and ever growing, cost becomes a dominating factor. Data teams often choose a hybrid approach where they add both systems and migrate the data from one to another depending on cost / latency expectations. Therefore, many users need to consider how to span their data, choosing what they wish to store in high performance local storage and what is placed on slower but more cost-effective cloud storage.

Fig: Tiered Storage for Apache Pinot in StarTree Cloud

Tiered storage for Apache Pinot in StarTree Cloud is designed to address this problem. With the option to use cloud object storage such as S3 / GCS directly in the query path, Pinot can now act as a hybrid system — it can use completely decoupled or tightly-coupled storage or have a mix of both. It lets you flexibly configure data to be on any tier and query it all seamlessly like any other table.

Most importantly, several investments have been made to reduce the latency issues that come with using decoupled storage. For instance, the design consciously stays away from approaches such as lazy loading — where entire segments would be downloaded during query time — or using local caches. Typical OLAP workloads consist of arbitrary slice-and-dice over varying time ranges, and caching-based approaches cannot offer predictable p99 latency due to the frequent download and churn of segments.

Instead, the tiered storage approach in Pinot for reading from cloud object storage uses strategies such as selective columnar / block reads, smart prefetching, pipelining fetch and compute. These optimizations, along with the ability to use the army of native indexes available in Pinot, help Pinot achieve the best of both worlds — reduced cost to serve while keeping the speed of Pinot.

Indexing Strategies

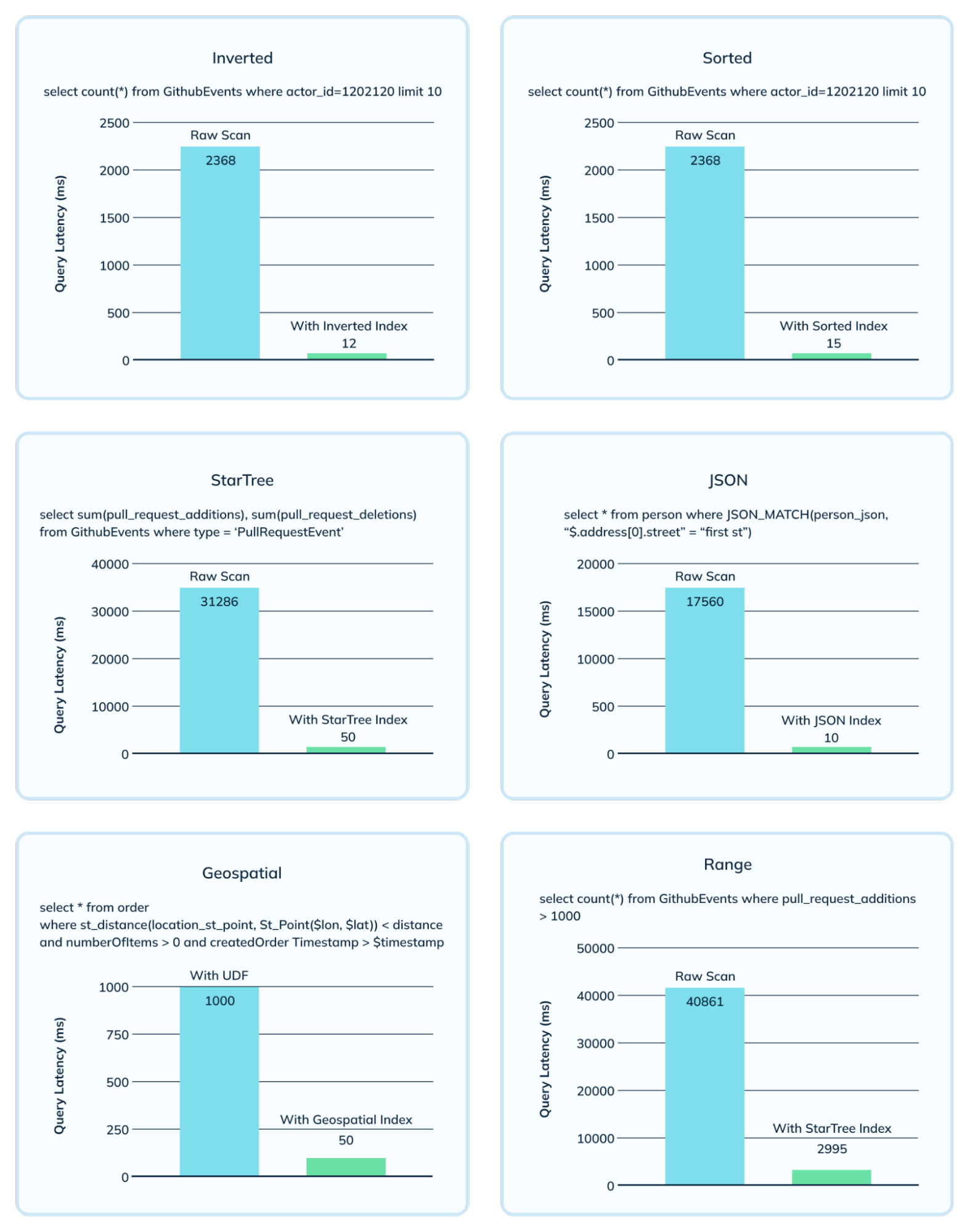

Indexing is a fundamental function of a real-time OLAP database which ensures queries can be executed efficiently and perform minimum data scans. In this section, we will compare the indexing strategies available across the three systems and also dive into why this is important.

| Type of Index | Functionality | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Indexing Strategies, a comparison of Apache Pinot, Apache Druid, and ClickHouse

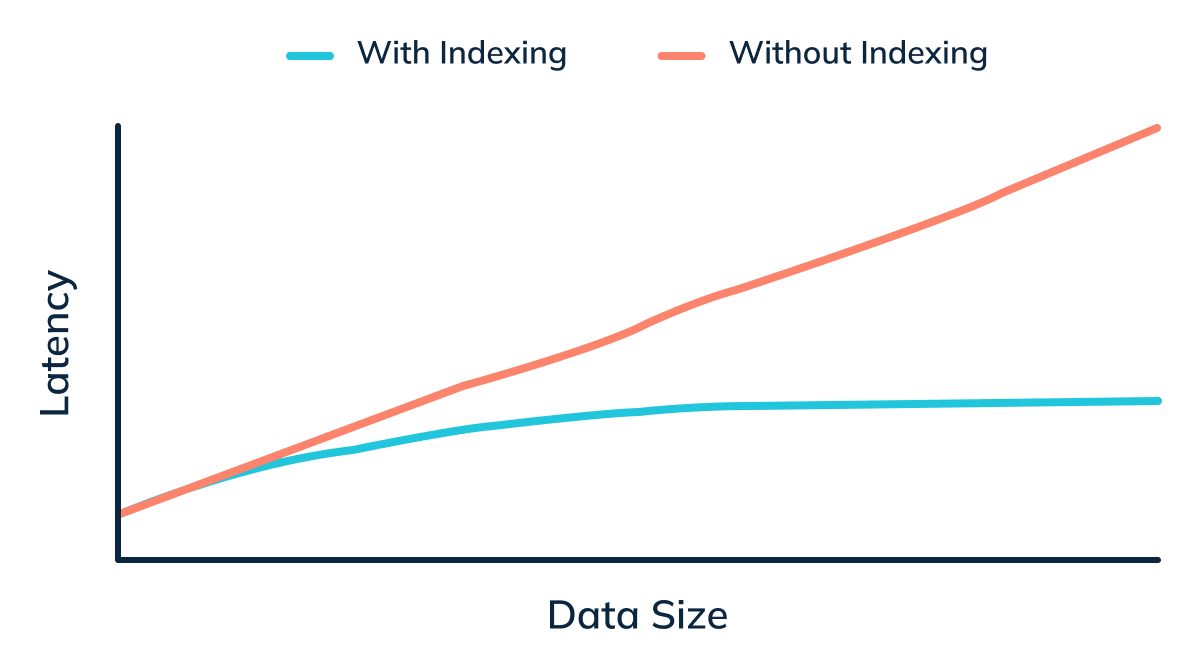

Why do Indexes Matter in an OLAP Database?

Indexes help minimize the data scans needed for processing a given OLAP query. This is important to ensure query performance is optimal and predictable regarding high throughput and latency, even as the underlying data keeps growing organically.

Fig: Impact of indexes on query latency with increasing data size

Apache Pinot natively supports a wide range of indexing strategies, making it the ideal OLAP platform for myriad use cases (as discussed here). In addition, it has a pluggable architecture, making it extremely easy to add new indexes as and when needed. For instance:

Star-Tree Index, Pinot’s way of building intelligent materialized views is crucial for running user-facing analytical applications. Specifically, it allows users to pre-aggregate data in a tunable fashion, leaving the source data intact. This enables highly concurrent, low latency key-value style queries. Any changes in the pre-aggregation function or corresponding dimensions can be handled dynamically without having the need to re-ingest all the data.

JSON index and text index are great at processing semi-structured data in sub-seconds (eg: analyzing system and application logs at scale)

Geo index accelerates geospatial queries, which are required by many logistics and delivery companies around the globe, as well as various other real-world location-based services.

Here’s a quick view of the power of indexes - comparing query latencies with and without the corresponding index.

Fig: How Apache Pinot’s indexes bring down the latency compared to a raw scan

As depicted above, indexes help reduce the query latency by many orders of magnitude. In addition, it reduces the amount of work and resources needed per server, thus increasing the system's overall throughput.

Query

This section compares the three systems in all aspects related to querying the database. It discusses commonly used query constructs in OLAP and advanced multi-stage query features such as joins and window functions. It finishes with query latency optimizations and features such as caching, routing, and pruning strategies.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Querying, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Joins

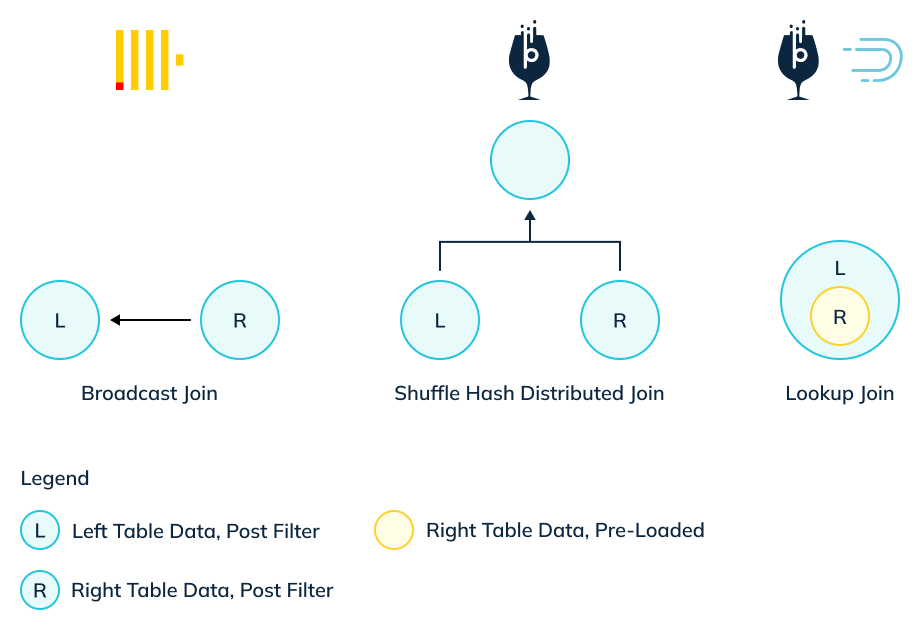

A Join query fundamentally works on two parts - a left table and a right table. There are three ways to execute a join.

Broadcast join: All data post filtering from the right table (R), is shipped to all nodes (N) of the left table (effective data moved R * N). This works well for cases when the filtered data being shipped is small and the number of nodes is also small. But query latency increases proportionately as the size of R increases or the number of nodes N increases. This is the only algorithm that ClickHouse applies for joins.

Shuffle distributed hash join: After applying filters, data from both sides (L and R) is hashed on the join key and shipped to an independent shuffle stage (effective data moved L + R). This scales well even if the size of data on either side increases. Plus, the performance is unaffected by the number of nodes on either side. Pinot is the only system that can execute joins using this algorithm.

Lookup join: Right table must be pre-loaded (replicated) on all nodes of left table. This can only work for very small right hand datasets. This is the only kind of join that Druid can execute. This is available in Pinot as well, for small dimension tables.

In a separate blog post, we will follow up with more details about the nuances of join implementation for all systems, so stay tuned.

Materialized Views

Materialized views (MVs) create a virtual table only using a subset of data from a base table, such as a select number of columns pertinent to a specific query. Users commonly use this to optimize query performance for well-known query patterns. Here’s how these different systems have implemented materialized views:

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Materialized Views, a comparison of Apache Pinot, Apache Druid, and ClickHouse

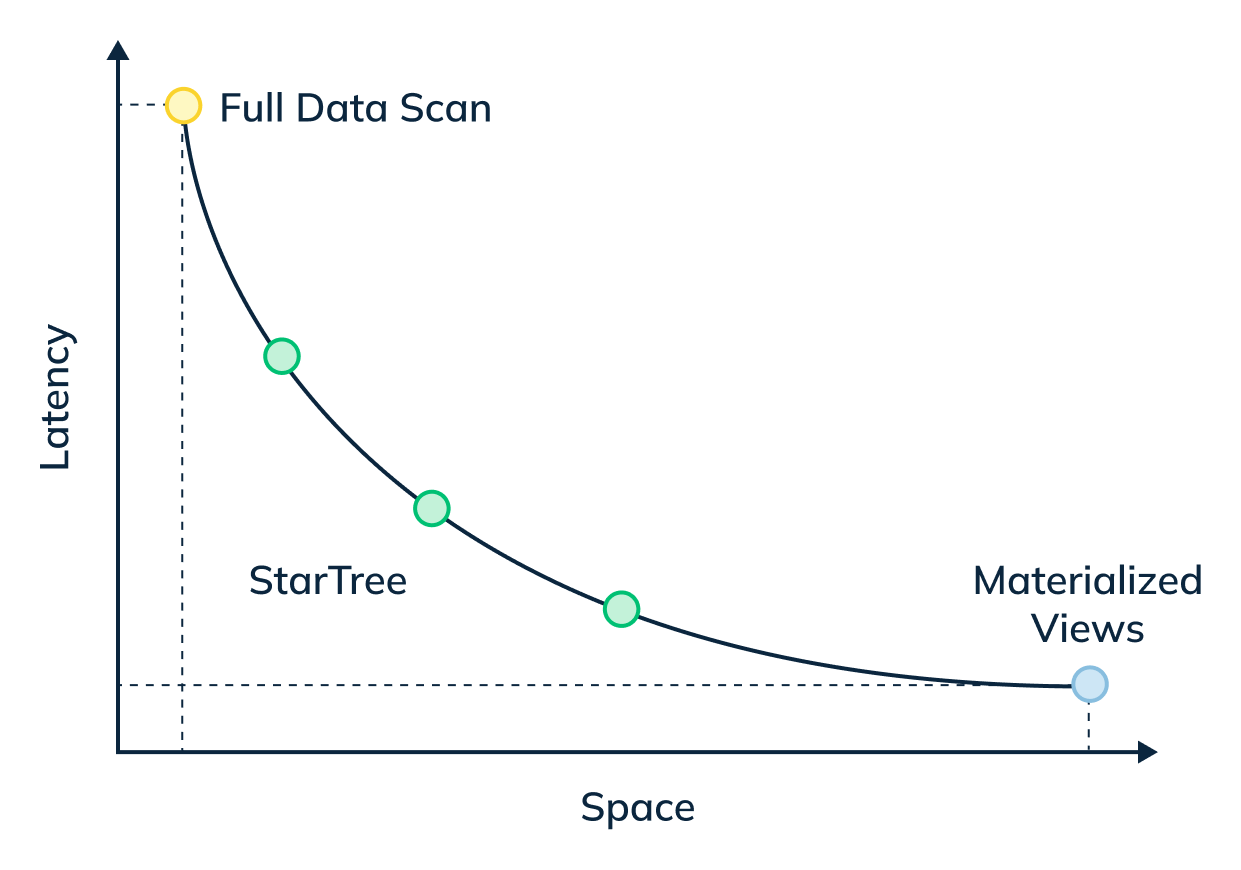

Star-Tree Index: Pinot’s intelligent materialized view

The star-tree index provides an intelligent way to build materialized views within Pinot. Traditional MVs work by fully materializing the computation for each source record that matches the specified predicates. Although useful, this can result in non-trivial storage overhead. On the other hand, the star-tree index allows us to partially materialize the computations and provide the ability to tune the space-time tradeoff by providing a configurable threshold between pre-aggregation and data scans.

Fig: star-tree index lets you choose your space-time tradeoff between pre-aggregating everything (a materialized view) or doing everything on-the-fly

The star-tree index allows us to pre-compute values of an aggregation function across the dimensions specified in a given materialized view. A configurable parameter called maxLeafRecords determines the maximum rows that need to be scanned to compute an aggregation. In other words, if maxLeafRecords is set to 1 then we get full materialization - same as a traditional MV. Setting this to a higher value results in significant space savings with a small query overhead.

Key benefits:

User controllable: Tune space vs. time overhead

Flexible: create any number of indexes. The right index is chosen based on the query structure.

Transparent: Unlike traditional MVs, users don’t need to know about the existence of a star-tree index. The same query will be accelerated with a star-tree index in place.

Dynamic: Very easy to generate a new index at any point of time.

Learn more about the star-tree index here.

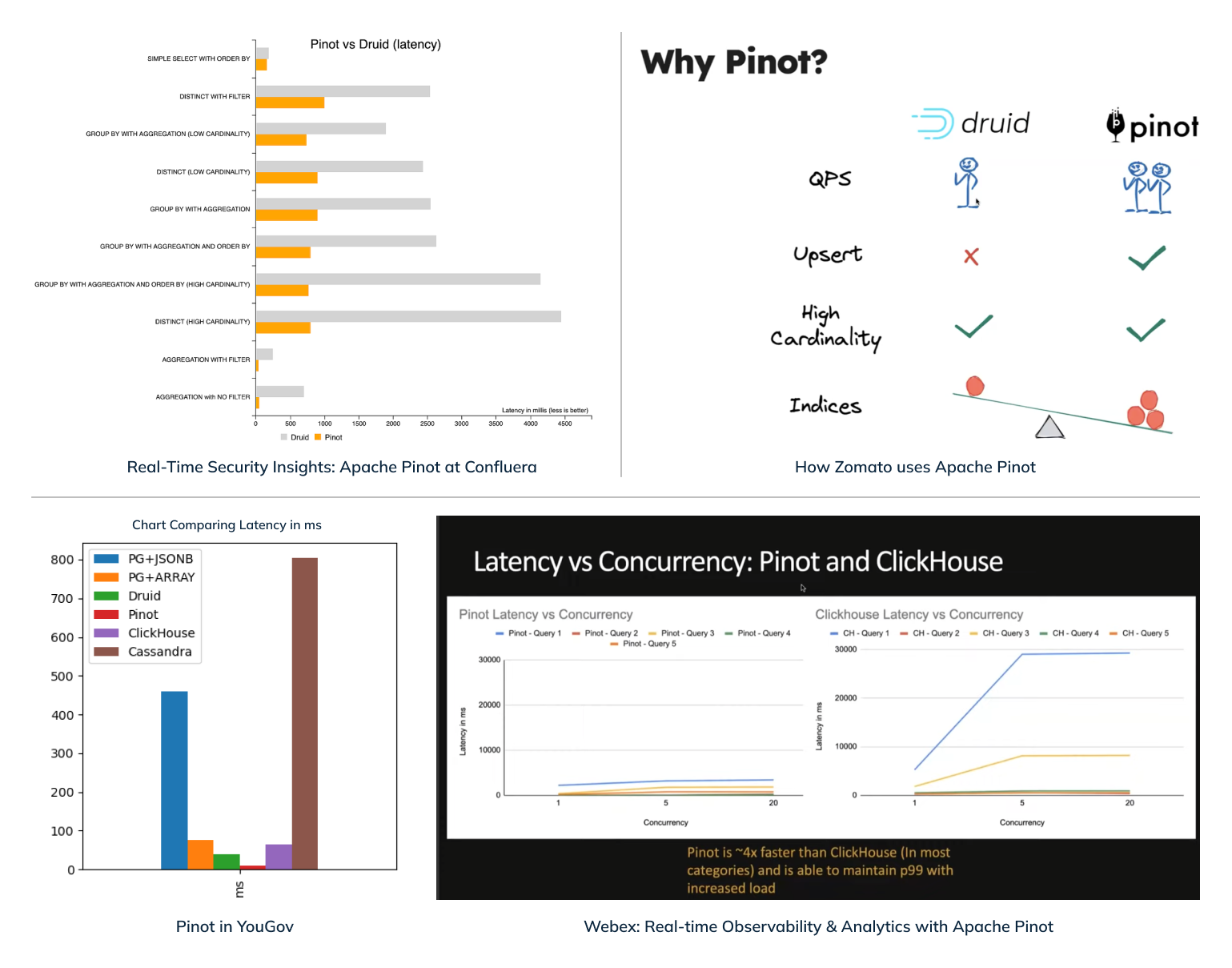

Query Performance

Performance benchmarking is highly use-case, query pattern, and dataset dependent. We recommend you evaluate query performance on your datasets across these systems. Here are some benchmarking numbers provided by neutral third parties:

Fig: Query performance benchmark graphs from the various sources as listed below

Cisco did an evaluation comparing ClickHouse and Pinot. View the setup details and results in Webex: Real-Time Observability & Analytics with Apache Pinot. A quote from the presentation – “Pinot is 4x faster than ClickHouse (in most categories) and is able to maintain p99 with increased load.”

This engineering blog post titled Real-time Security Insights: Apache Pinot at Confluera talks about their benchmarking done on Druid and Pinot. The results showed queries on Pinot were 2x - 4x faster than on Druid and could sustain a much higher throughput.

This blog post titled Pinot in YouGov discusses a comparison they did with several technologies, and Pinot was 5x - 7x faster than Druid and ClickHouse.

This session How Zomato uses Apache Pinot talks about how Pinot could handle 2x the query throughput compared to Druid using the same cluster size.

Cluster Management

This section compares the systems on operability, which comprises several aspects such as, ease of cluster operations (scaling, maintenance, disaster recovery), visibility into cluster status, flexible cluster setup options to enhance manageability and help maintain SLA guarantees, and so on.

While all three databases are designed for high availability (HA), and each seeks to avoid single points of failure (SPOFs), each handles cluster component failures differently and thus provides differing overall reliability and resiliency.

| Capability | Apache Pinot | Apache Druid | Clickhouse |

|---|

Table: Cluster Management, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Security

Here, we compare the key security requirements needed for an enterprise-grade real-time OLAP database.

| Capability | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Security, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Cloud Deployment Model

Finally, we look at how easy it is to deploy these three systems as a fully-managed cloud solution, requiring a commercial Database-as-a-Service (DBaaS) provider. Users can, of course, self-deploy any open-source databases to the public cloud as a self-managed solution.

| Capability | Apache Pinot (StarTree Cloud) | Apache Druid (Imply) | ClickHouse (ClickHouse Cloud) |

|---|

Table: Cloud Deployment Model, a comparison of StarTree Cloud, Imply, and ClickHouse Cloud

StarTree’s Bring Your Own Cloud (BYOC) deployment model

StarTree provides a fully managed BYOC offering in which the data never leaves the customer’s cloud account. This is tremendously beneficial for the customer who retains full control over the data and access to that data without having to deal with the complexities of running a production grade Pinot cluster.

Here are the key benefits of this model:

Data security/governance: Data never leaves the customer's cloud perimeter. Customers also retain full control over data access.

SLA: StarTree customers get the same SLA as the corresponding SaaS model

Fully managed: StarTree is responsible for handling deployment, upgrades, routine maintenance, backups / recoveries, and capacity planning on behalf of the customers.

Principle of least privilege: StarTree Cloud runs with the least privilege model for operating and managing BYOC clusters with the exception of the one time deployment.

No VPC peering needed: StarTree’s control plane does not need any VPC peering with the remote data plane. This makes it frictionless to deploy and scalable across multiple deployments

Cost Effective: Customers can fine-tune the cluster configuration for cost optimization regarding instance type, storage type, and avail of their cloud discounts as applicable.

Outbound Connectors

The ability to connect these databases with Business Intelligence (BI) or visualization tools is crucial for doing ad-hoc analysis on real-time data. Here is how the three systems compare in terms of outbound connectors:

| Connector | Apache Pinot | Apache Druid | ClickHouse |

|---|

Table: Outbound Connectors, a comparison of Apache Pinot, Apache Druid, and ClickHouse

Feedback

We hope you enjoyed reading this comparison! We’ve tried our best to ensure that all the content is accurate and to the best of our knowledge. However, it's possible that we missed something or overlooked some details. Plus, of course, this is a point-in-time analysis. Future updates to any of these databases could require revisions to the information provided above. Please contact us if you have any feedback, and we’d be happy to incorporate it!

Intrigued by Apache Pinot? Try it out!

Perhaps the analysis you’ve read above has gotten you curious about Apache Pinot. If so, you are free to download open-source Apache Pinot. Or, if you want a fully-managed experience, you can sign up to try StarTree Cloud, built on Apache Pinot, free for 30 days.

Acknowledgements:

Thanks to Rachel Pedreschi for the very thorough review and insights on Apache Druid, Xiaobing Li for reviewing and helping us dig deep into ClickHouse, and Shounak Kulkarni for validating some of our findings and assumptions on real cluster setups for all three systems!

Many thanks to Madison Sorenson for making the beautiful illustrations. And to all our internal reviewers and editors –Peter Corless, Tim Berglund, Kishore Gopalakrishna, Kulbir Nijjer, and Allison Murphy– for the valuable feedback and direction!

Changelog

4/18/2023 - Original publish date

Apache PinotIndustry InsightsTechnology

StarTree Named a Leader in Real-Time Analytics!

Discover why StarTree was recognized as both a leader and fast mover in the latest GigaOm report